Lean Poker Recap

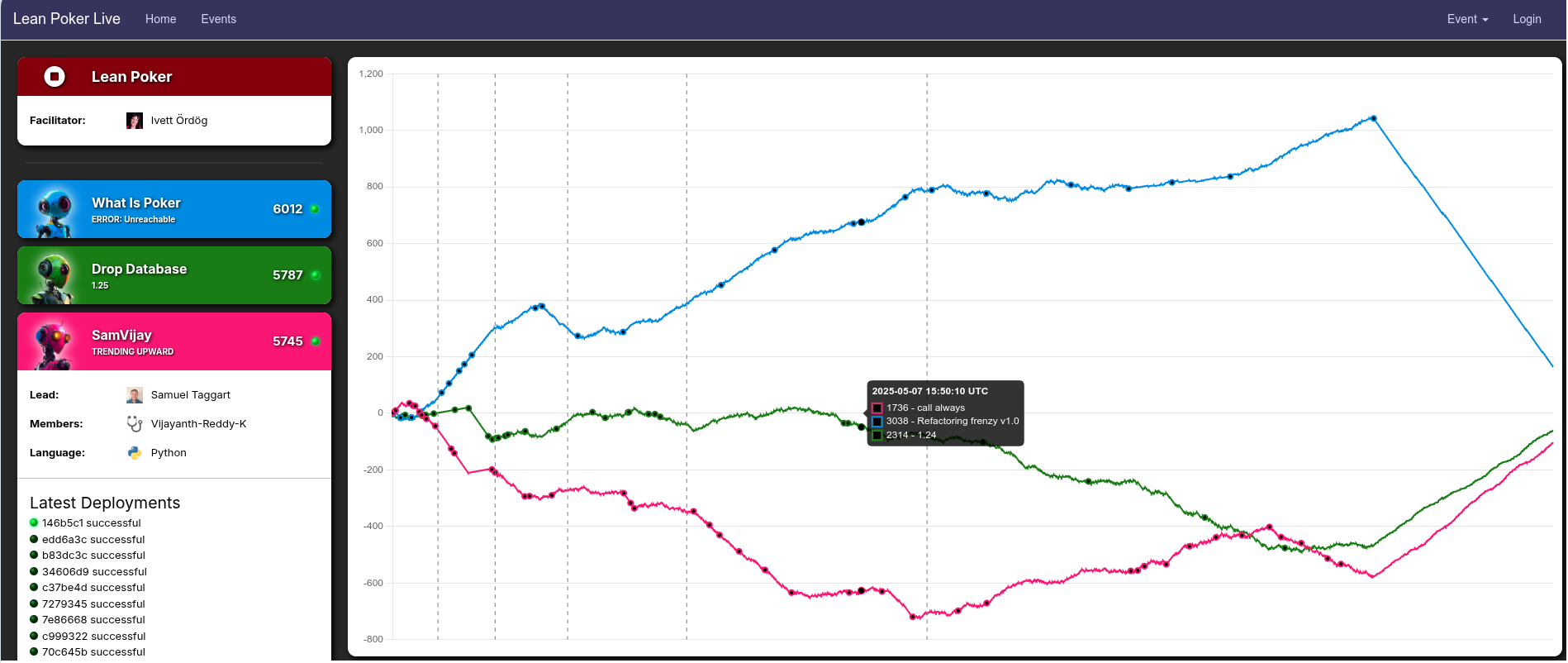

Recently I participated in Lean Poker, a workshop run by my friend Ivett Ördög. We talked about it a little on a recent episode of The LabVIEW Experiment. It's a competition where each team has a poker-playing bot. The bot starts out basically just folding, and the idea is to gradually add features to make it play better. A website tracks each team's performance in real time and lets the developers look at individual games to see how their strategy is working.

I was joined in the workshop by another LabVIEW developer, Vijayanth. The workshop was spread over 2 days. The first day it was just Vijayanth and me, and on the second day a guy named James joined us. He had been a competitor the day before, but his partner couldn't make the second day.

James' team was killing us the first day, mostly because they were just vibe coding with AI. Vijayanth and I were using Python, which I am somewhat familiar with. Vijayanth didn't know anything about Python or poker, so at the very beginning it was mostly me coding while Vijayanth got his bearings. Eventually he was able to contribute. In the end, we had a lot of fun and learned a ton. It was not at all what I expected, though.

Expectations Versus Reality

I went into it expecting to follow all the traditional "good" software engineering practices: TDD, pair programming, CI/CD, etc. It didn't exactly work out that way, although we did adhere to the main principle of small steps and quick feedback.

How the Game Works

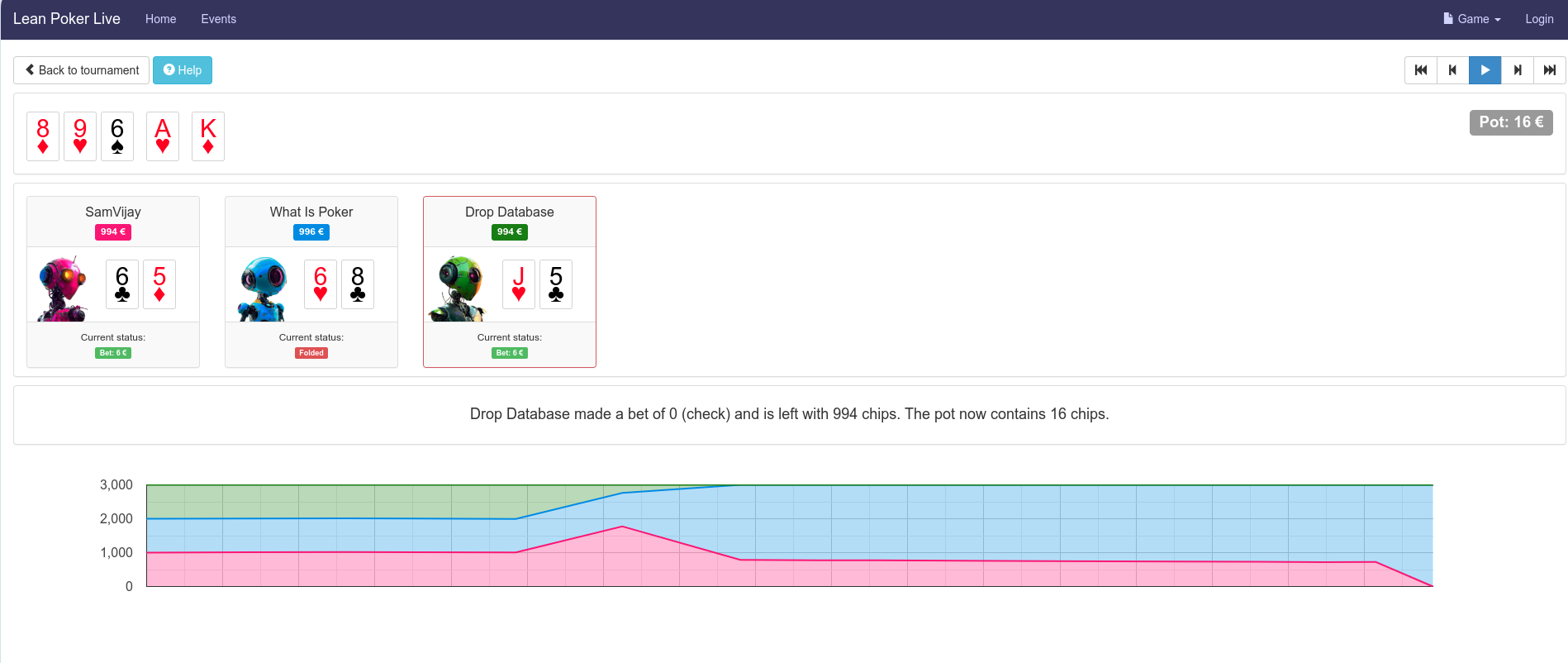

The game is Texas Hold'em poker. Every team starts with 1000 chips, and you play a series of hands until one team has won all the chips. First place earns points, and second place earns a small handful of points as well. A new game starts every few seconds.

On the programming side, when it's your team's turn to bet, your bot receives an HTTP request asking how much you want to bet, and you return an amount. Along with the request comes a chunk of JSON containing the game state (the betting history, which cards are visible, your current chip count, etc.) to help you make a better decision. In practice, all the HTTP plumbing is already written: you have a function that takes a JSON blob called "game_state" and returns an integer bet. Everything you do goes inside there.
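To make the shape of that function concrete, here is a minimal sketch. The function name `bet_request`, the helper `chips_to_call`, and the JSON field names (`players`, `in_action`, `current_buy_in`, `bet`, `stack`, `hole_cards`) are assumptions loosely modeled on the Lean Poker payload, not copied from the starter code, so check the real game_state before relying on them.

```python
import json

# A trimmed-down, hypothetical game_state. Field names are assumptions
# modeled on the Lean Poker JSON; verify them against the real payload.
SAMPLE_STATE = json.loads("""
{
  "in_action": 1,
  "current_buy_in": 80,
  "minimum_raise": 40,
  "community_cards": [],
  "players": [
    {"name": "Them", "stack": 920, "bet": 80, "status": "active"},
    {"name": "Us", "stack": 960, "bet": 40, "status": "active",
     "hole_cards": [{"rank": "K", "suit": "spades"},
                    {"rank": "K", "suit": "hearts"}]}
  ]
}
""")


def chips_to_call(game_state: dict) -> int:
    """Chips we must add to match the current highest bet."""
    me = game_state["players"][game_state["in_action"]]  # our own seat
    return game_state["current_buy_in"] - me["bet"]


def bet_request(game_state: dict) -> int:
    """The whole bot lives here: game_state in, bet size out.
    Returning 0 checks when nothing is owed and folds otherwise,
    like the do-nothing bot every team starts with."""
    return 0


print(chips_to_call(SAMPLE_STATE), bet_request(SAMPLE_STATE))
```

Everything after this point is just a smarter body for `bet_request`.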

You also get a separate web request at the end of every hand with the results of that hand. We didn't actually do anything with that information, though we probably would have with more time.

Deploying your solution was quite simple: you pushed to a GitHub repository that had a preconfigured CI hook to deploy your code. Very easy.

A Good Start

Right away I realized that by default every bot was just checking or folding, and that provided an opportunity. I suggested we change the bet function to return 1000, the entire starting chip stack, so we'd go all-in on every hand. Everyone else was just checking by default, so we'd win by default. We did that and it worked very well. It took our competitors a few hands to catch on to what we were doing, and then they started doing something similar.
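In code, the change amounted to roughly this. It's a sketch: `bet_request` is a hypothetical name for the function the starter code hands you, and the game state is ignored entirely.

```python
ALL_IN = 1000  # the entire starting chip stack


def bet_request(game_state: dict) -> int:
    # Ignore the game state and shove every hand. Against bots that
    # never put chips in, they fold and we collect the pot.
    return ALL_IN
```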

Our next simple strategy was to look at our cards pre-flop and go all-in if we had a strong hand (a pocket pair or suited connectors), bet if we had a decent hand, stay in if we had a high card, and fold otherwise. If we made it into later betting rounds, we just kept betting. That worked well for a while too.
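A rough sketch of that decision tree follows. The cut-offs for "decent" (two cards ten or higher) and "high card" (at least a jack) are illustrative guesses, not the values we used, and the card format and field names are assumptions about the game_state JSON.

```python
# Hypothetical rank table; cards are assumed to look like {"rank": "K", "suit": "spades"}.
RANK_VALUE = {**{str(n): n for n in range(2, 11)}, "J": 11, "Q": 12, "K": 13, "A": 14}


def preflop_category(hole_cards: list) -> str:
    """Bucket our two hole cards. The 'decent' and 'high_card' cut-offs
    are illustrative guesses, not tuned values."""
    a, b = (RANK_VALUE[card["rank"]] for card in hole_cards)
    suited = hole_cards[0]["suit"] == hole_cards[1]["suit"]
    if a == b or (suited and abs(a - b) == 1):
        return "strong"      # pocket pair or suited connectors
    if min(a, b) >= 10:
        return "decent"      # two cards ten or higher
    if max(a, b) >= 11:
        return "high_card"   # at least a jack
    return "weak"


def bet_request(game_state: dict) -> int:
    me = game_state["players"][game_state["in_action"]]
    to_call = game_state["current_buy_in"] - me["bet"]
    raise_amount = min(me["stack"], to_call + game_state["minimum_raise"])
    if game_state["community_cards"]:
        return raise_amount  # after the flop: just keep betting
    category = preflop_category(me["hole_cards"])
    if category == "strong":
        return me["stack"]   # all in
    if category == "decent":
        return raise_amount  # bet
    if category == "high_card":
        return to_call       # stay in by calling
    return 0                 # fold
```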

First Lesson

That worked well until we pushed something that broke the code. At this point I was coding by hand in Python, and I screwed up some syntax, so our bot generated a run-time error that basically caused us to forfeit almost every hand. The error only happened on one code path, but it happened to be a popular one. I was really missing LabVIEW's type safety. In LabVIEW, what I did would have been a compile error, and it would have been immediately obvious.

It took me a while to figure out what the problem was. The big lesson, which I didn't realize until the retrospective at the end, was that we left the buggy code deployed while I was trying to figure it out. Looking at the graph at the end, we lost a lot more than we should have there. We should have rolled back immediately while I sorted out the syntax error. We also lost a lot of time here because we didn't really understand how to run our web service locally for testing.
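Rolling back is a process fix, but an error hiding on one code path can also be caught before a push by calling the bet function on a handful of saved game states. A minimal sketch of such a smoke test; `smoke_test`, `buggy_bet`, and the sample states are all hypothetical.

```python
def smoke_test(bet_fn, states):
    """Call bet_fn on every sample state and collect failures instead of
    discovering them in production. Returns a list of (index, message)."""
    failures = []
    for i, state in enumerate(states):
        try:
            bet = bet_fn(state)
        except Exception as exc:  # any run-time error on this code path
            failures.append((i, f"{type(exc).__name__}: {exc}"))
            continue
        if not isinstance(bet, int) or bet < 0:
            failures.append((i, f"bad bet value: {bet!r}"))
    return failures


def buggy_bet(state):
    # Hypothetical bot with a typo on one branch only.
    if state["community_cards"]:
        return state["current_buy_in"]
    return curent_buy_in  # NameError, but only on the pre-flop path


SAMPLE_STATES = [
    {"community_cards": [], "current_buy_in": 20},
    {"community_cards": [{"rank": "A", "suit": "spades"}], "current_buy_in": 40},
]

print(smoke_test(buggy_bet, SAMPLE_STATES))
```

The more varied the saved states (pre-flop, post-flop, short stack, nothing owed), the more code paths get exercised before the code reaches the live tournament.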

Testing

Up to this point, we hadn't been testing anything. After this, we had a simple test we could run: we'd spin up the service locally, manually edit a JSON file containing a sample game state, send it over with curl, and look at the result. It wasn't perfect, but it was something.
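The same round trip can be scripted with Python's standard library. This is a sketch under assumptions: the local address is hypothetical, and it assumes the bot expects a form-encoded POST with an `action` field plus the game state serialized as JSON in a `game_state` field, so compare with what your starter kit actually parses.

```python
import json
import urllib.parse
import urllib.request

LOCAL_URL = "http://localhost:9000/"  # hypothetical local address of the bot


def build_body(game_state: dict, action: str = "bet_request") -> bytes:
    """Form-encode the request the way we assume the dealer does:
    an 'action' field plus the game_state serialized as JSON."""
    return urllib.parse.urlencode(
        {"action": action, "game_state": json.dumps(game_state)}
    ).encode()


def ask_for_bet(game_state: dict, url: str = LOCAL_URL) -> str:
    """POST one game state to the locally running bot and return its reply."""
    request = urllib.request.Request(url, data=build_body(game_state), method="POST")
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.read().decode()


# Usage, with the bot running locally:
#   state = json.load(open("sample_game_state.json"))  # hypothetical file
#   state["current_buy_in"] = 200                       # tweak a field by hand
#   print(ask_for_bet(state))
```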

Feedback

We really only used the testing for debugging. For the most part, we simply made changes, pushed them to production, and watched our numbers go up or down. If they went up, we moved on to the next optimization. If they went down or we hit an error, we rolled back. We would look at the individual hands to see why we were losing, and if we had an error, we could edit our JSON file and send it to the local server to see what we got back.

Generally, we chose the next optimization by looking at the hands we were losing in each game, trying to work out why, and coming up with a strategy to mitigate it. A web UI lets you step through each hand.

- Deploy

- Look at the results

- Roll back if bad

- Look at losing hands to determine the next "feature" or figure out the cause of our bug.

That was basically our strategy for the rest of the workshop. We were able to iterate pretty fast.

Using AI

On the second day, when James joined us, we were solidly in last place. James and his team had been using TypeScript, and he didn't know any Python, so he wasn't a ton of help on the programming side, although he was able to use an LLM to find some problems and suggest some fixes.

At this point, we didn't have much strategy beyond the opening round of betting. I realized we needed to look at all the available cards and determine both our best hand and the best possible hand. I knew I could probably write that, but it would take a while and would likely be error-prone. So I decided to vibe code it, and ChatGPT did not disappoint. In a few minutes it spit out a function that seemed to work. I briefly glanced at it and it looked correct. More importantly, when we added a little of our own logic for deciding when to bet (based on our hand and the best possible hand; we wrote that part ourselves) and deployed it, our numbers started to increase drastically.
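The ChatGPT function isn't reproduced here. As an illustration of the underlying technique only, this sketch brute-forces every 5-card combination of the available cards and keeps the highest score. It assumes the same hypothetical card format as the game_state JSON, and it needs at least five cards, so it only applies from the flop onward.

```python
from collections import Counter
from itertools import combinations

# Hypothetical rank table; cards are assumed to look like {"rank": "K", "suit": "spades"}.
RANKS = {**{str(n): n for n in range(2, 11)}, "J": 11, "Q": 12, "K": 13, "A": 14}


def score_five(cards):
    """Score exactly five (rank, suit) cards as (category, tiebreakers...).
    Category runs from 0 (high card) to 8 (straight flush); higher tuples win."""
    values = sorted((rank for rank, _ in cards), reverse=True)
    counts = Counter(values)
    # Order ranks by (count, rank) so e.g. the trips of a full house come first.
    grouped = sorted(counts, key=lambda v: (counts[v], v), reverse=True)
    shape = sorted(counts.values(), reverse=True)
    flush = len({suit for _, suit in cards}) == 1
    unique = sorted(set(values), reverse=True)
    straight_high = None
    if len(unique) == 5:
        if unique[0] - unique[4] == 4:
            straight_high = unique[0]
        elif unique == [14, 5, 4, 3, 2]:  # the wheel: A-2-3-4-5
            straight_high = 5
    if straight_high is not None and flush:
        return (8, straight_high)
    if shape == [4, 1]:
        return (7, *grouped)
    if shape == [3, 2]:
        return (6, *grouped)
    if flush:
        return (5, *values)
    if straight_high is not None:
        return (4, straight_high)
    if shape == [3, 1, 1]:
        return (3, *grouped)
    if shape == [2, 2, 1]:
        return (2, *grouped)
    if shape == [2, 1, 1, 1]:
        return (1, *grouped)
    return (0, *values)


def best_hand(hole_cards, community_cards):
    """Best score over every 5-card combination of hole + community cards."""
    cards = [(RANKS[c["rank"]], c["suit"]) for c in hole_cards + community_cards]
    if len(cards) < 5:
        raise ValueError("need at least five cards (flop or later)")
    return max(score_five(combo) for combo in combinations(cards, 5))
```

With at most seven cards there are only 21 combinations, so brute force is fast enough for a bot answering one request at a time.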

Then we went through a series of tweaks to how aggressive we wanted to be. We'd make a simple change, push it, watch the numbers, and adjust if needed, often by just rolling back and trying a different tweak.
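Tweaks like these are easiest when aggression lives in a couple of named constants, so each experiment changes a single number. A hypothetical sketch: hand categories are numbered from 0 (high card) to 8 (straight flush), and `RAISE_AT`, `CALL_AT`, and `decide_bet` are illustrative names, not our actual code.

```python
# Hypothetical tuning knobs, with hand categories numbered from
# 0 (high card) up to 8 (straight flush). Each experiment changed one knob.
RAISE_AT = 3   # three of a kind or better: raise
CALL_AT = 1    # a pair or better: call


def decide_bet(category: int, to_call: int, minimum_raise: int, stack: int,
               raise_at: int = RAISE_AT, call_at: int = CALL_AT) -> int:
    """Map our hand category to a bet; lower thresholds mean more aggression."""
    if category >= raise_at:
        return min(stack, to_call + minimum_raise)
    if category >= call_at:
        return min(stack, to_call)
    return 0  # check if nothing is owed, otherwise fold
```

Lowering `call_at` from 1 to 0, for example, makes the bot call with any hand, which is easy to try and just as easy to roll back.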

Interesting Observation

That worked for quite a while, but it eventually led to an interesting observation. Things were going well. We pushed a change and things started going downhill, so we rolled back, expecting things to go back uphill. They didn't; they kept going down. Meanwhile, one of our competitors had changed their algorithm, which meant our original algorithm, which had been working fine, no longer worked. I found it interesting that our success didn't just depend on what we did but also on what our competitors did, kind of like in business...

We needed more vibes

The last major lesson I learned came up in the final retro. When James left his team, Ivett took it over. She was also vibe coding, but at a higher level. She asked the AI to write an algorithm that pitted the lowest 2 teams against each other (i.e., only bet when she was head to head with just 1 of us), which let us take each other out. She also asked it to help whichever team was behind by letting them win, and to bet aggressively only against the team that was ahead. That worked really well.
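We never saw Ivett's AI-generated code, so this is only one hypothetical way to express the idea: commit chips only when heads-up against the chip leader, and otherwise stay out and let the trailing teams knock each other out. It assumes each player entry has a `stack` and a `status` of `"active"`, `"folded"`, or `"out"`; `should_fight` is an illustrative name.

```python
def should_fight(game_state: dict) -> bool:
    """Hypothetical rule: commit chips only when we are heads-up against the
    chip leader; in any other spot, stay out and let the others bust each other.
    Assumes each player has "stack" and "status" ("active", "folded", "out")."""
    me = game_state["in_action"]
    others = [p for i, p in enumerate(game_state["players"])
              if i != me and p["status"] != "out"]
    if not others:
        return False
    leader = max(others, key=lambda p: p["stack"])  # the team that is ahead
    still_in_hand = [p for p in others if p["status"] == "active"]
    return len(still_in_hand) == 1 and still_in_hand[0] is leader


def bet_request(game_state: dict) -> int:
    me = game_state["players"][game_state["in_action"]]
    if should_fight(game_state):
        return me["stack"]  # aggressive only against the team that is ahead
    return 0                # otherwise check or fold and stay out of the way
```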

What I realized was that I was only asking AI to solve a small part of the problem (ranking hands) and I was writing the betting logic based on the ranks. I should have asked AI to solve the entire problem.

Ivett did say that using AI built up a lot of technical debt and that at one point she spent a lot of time refactoring, so it's not all fun and games. However, the AI team did win. As skeptical as I still am of AI in general, in this particular case it proved useful.

A LabVIEW Version?

If any of this sounds interesting, I would love to organize something like this in LabVIEW, maybe in person at a conference or via Zoom. Ivett is interested in hosting a LabVIEW version. The catch is that she is not a LabVIEW programmer, so we'd have to write a LabVIEW web service. I think it could be done. The biggest challenge is the deployment part, but again, it's probably doable. If you would be interested in participating in such a workshop and/or working on the web service, use the button below to reach out.