How I use Test-Driven Development (TDD)

A lot of people out there are very dogmatic about Test-Driven Development. There are a lot of rules and a lot of very strong opinions. If you don’t believe me, go check out Twitter. While on Twitter, I saw GeePaw Hill’s Ten I statements about TDD. It inspired me to write a little bit about how I view and use TDD.

I view TDD as a design tool.

For me, the main point of writing the tests first is not to amass a bunch of tests, but to use the tests as a design tool. The tests themselves are nice. They certainly make refactoring safe, but that is not the point. The tests drive my design. I try to write just enough code to make the test pass. Then the real design happens in the refactoring phase. I feel like patterns just become obvious when I look at the code. Often they are things that I don’t think I would have found easily just staring at the problem. I generally start out with some idea of what the end design will be, but I try not to hold onto that too tightly and let the tests guide me.

I think simply returning a constant to make a test pass is lame.

When it comes to writing just enough code for the test to pass, some people take it to the extreme. They write a test, have the code output a constant that is the expected response, and then refactor from there. For whatever reason, I have a visceral negative reaction to that. I try to avoid it. I try to write some minimal amount of code. Often, if I have a particular solution in mind, I’ll just write that right away. However, when I am really stuck I do sometimes use a constant, but it’s pretty rare. Generally, dropping a lot of constants is a sign that you need more tests to force you into a better design.
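To make the trade-off concrete, here is a minimal Python sketch (Python rather than LabVIEW, since block diagrams don't paste well into text). The function names `total_price_faked` and `total_price` are made up for illustration. The first version hard-codes the expected answer; the second test is the one that forces a real implementation.

```python
import unittest


def total_price_faked(prices):
    # "Fake it": return exactly what the first test expects.
    return 5


def total_price(prices):
    # The generalized version that a second test forces into existence.
    return sum(prices)


class TotalPriceTest(unittest.TestCase):
    def test_single_item(self):
        # A constant would be enough to pass this test on its own.
        self.assertEqual(total_price([5]), 5)

    def test_two_items(self):
        # This is the test a hard-coded 5 cannot satisfy.
        self.assertEqual(total_price([5, 7]), 12)

    def test_constant_only_survives_one_test(self):
        self.assertEqual(total_price_faked([5]), 5)
        self.assertNotEqual(total_price_faked([5, 7]), 12)
```

Saved to a file, this can be run with `python -m unittest <file>`. If an implementation still contains a constant after several tests, that is usually a hint that a test like `test_two_items` is missing.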

I use a testing todo list.

Before I start writing any tests, I typically open up vim and start making a checklist of all the tests that I think I’ll need. It’s generally a living document. I’m constantly editing it and adding to it as I think of special cases. I check the test cases off as I implement them. Sometimes I check this into git, sometimes not.

TDD helps me avoid debugging.

I think debugging is often a big time sink. We spend a lot of time searching for bugs. Fixing them generally isn’t the problem; it’s usually the searching that is time-consuming. Doing really short TDD loops helps me to avoid that. I write a test and then write some code to make it pass. If the test fails, I don’t have to bother debugging to figure out why. I take a quick look and if it is not immediately obvious what’s wrong, I just throw my solution out and try again. Because my loops are so short (I try to keep them under 5 minutes), throwing away such a small amount of work is no big deal.

TDD helps me write better code faster.

TDD allows me to write better code faster. I say the code is better because it’s tested and I know it works. Also, the things that make code testable coincide with good design principles, so I know I have that going for me too. TDD helps me go faster because, as mentioned above, it helps me avoid a lot of debugging. It does feel slow at first, but once I get a rhythm going, it picks up quickly.

I sometimes write the API for the code I want to test first.

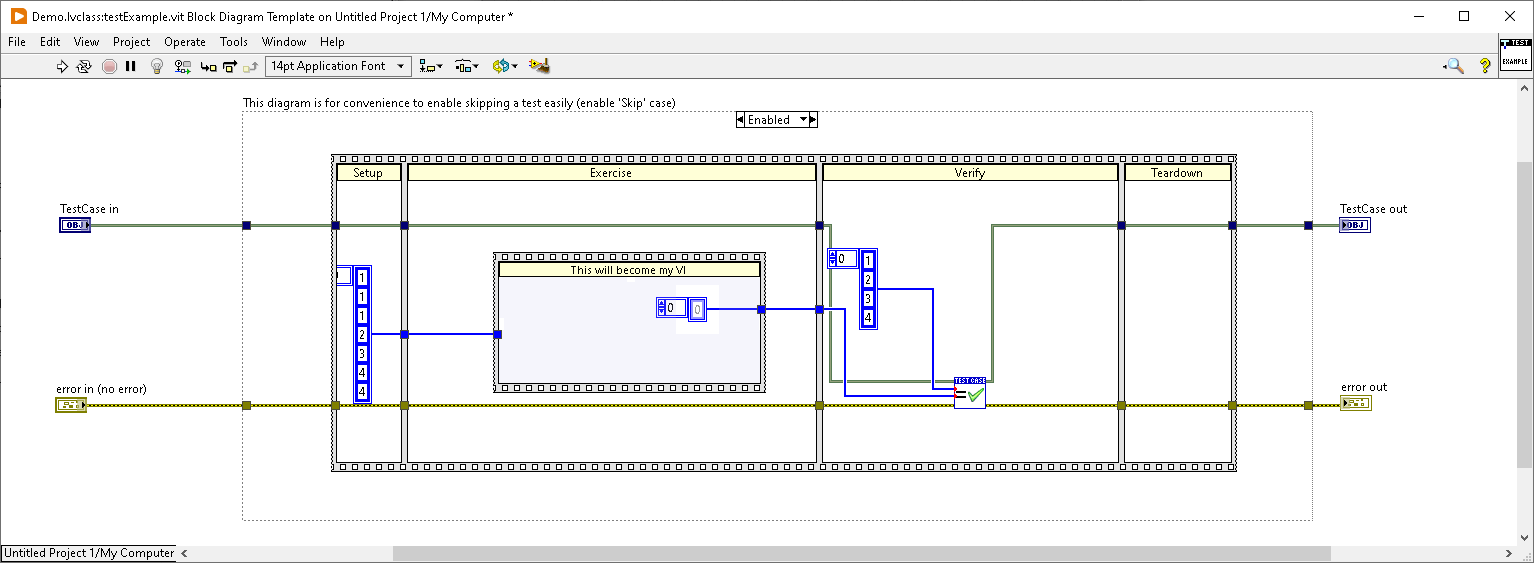

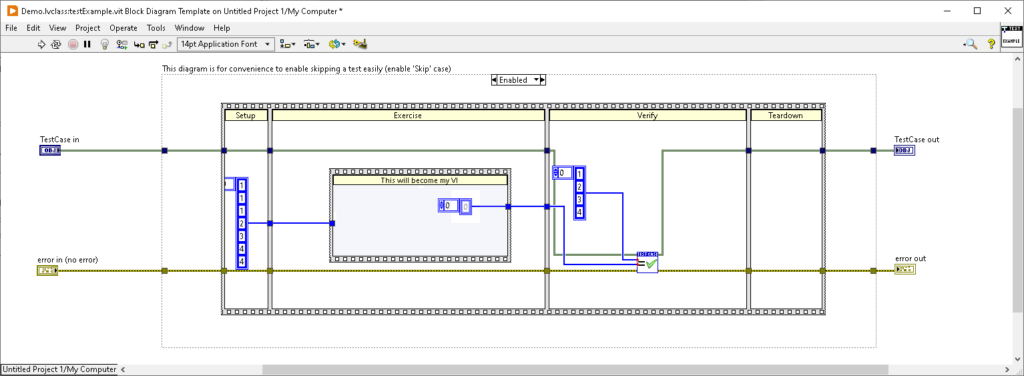

They say you are supposed to write the test first. I struggled with that a lot when I first started in LabVIEW. I went through a phase where I would write the API method first and then write the test. In those cases, I considered it a draft API and I was not afraid to change it as I learned more about the problem. Lately, I’ve been getting away from writing the API first. I’ve been writing the test and just dropping a sequence structure in it. I wire the inputs into the sequence structure and drop constants inside it that I wire out for the outputs. Then once I have the test written and have watched it fail, I highlight the sequence structure and turn that into a subVI. It is a slight pain because the subVI ends up in the test class, so I have to go find it in the project, drag it to where it belongs, and redo the icon. I also then have to remove the sequence structure (QuickDrop makes this easy). I still do it this way though. For some reason, I like the ritual of starting with the test. Besides, even if I started with creating the method first, I’d have to switch back to the project anyway, so I feel like it’s a wash. In those cases when I do write the API first, I certainly am not afraid to change it as I learn more about the problem.

I sometimes write production code without writing tests.

As much as I like TDD, I don’t always do it. There are some things that are just really hard to write unit tests for. In those cases I rely on integration tests. Now it is interesting to note that sometimes the fact that something is hard to test is a design smell. It tells me that I haven’t found the optimal design yet. It’s worth spending a little time investigating that before I write off starting with a test.

I wrote a mocking framework for LabVIEW and barely use it.

A lot of people talk about Mocks. When I first learned about them, I wanted to play around with the idea, so I wrote a Mock Object Framework for LabVIEW. As I’ve learned more, I’ve gotten away from using them. A lot can be done with the Humble Object design pattern to avoid the need for Mocks. I’m sure they have a place. I’m just not entirely sure how they fit in yet.
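For readers who haven't met the Humble Object pattern, here is a small Python sketch of the general idea. It is not taken from any of my LabVIEW projects: `needs_heating`, `HumbleHeaterController`, and the hysteresis value are all hypothetical. The decision logic lives in a plain function that needs no mock, and the hardware-facing shell is kept so thin that there is almost nothing in it to test.

```python
from typing import Callable


def needs_heating(temperature_c: float, setpoint_c: float,
                  hysteresis_c: float = 0.5) -> bool:
    """Pure decision logic: no hardware, so it can be tested directly."""
    return temperature_c < setpoint_c - hysteresis_c


class HumbleHeaterController:
    """Thin shell around the I/O. It makes no decisions of its own."""

    def __init__(self,
                 read_temperature: Callable[[], float],
                 set_heater: Callable[[bool], None],
                 setpoint_c: float) -> None:
        self._read_temperature = read_temperature
        self._set_heater = set_heater
        self._setpoint_c = setpoint_c

    def step(self) -> None:
        # Read, delegate the decision, write. Nothing else happens here.
        reading = self._read_temperature()
        self._set_heater(needs_heating(reading, self._setpoint_c))
```

The unit tests target `needs_heating`; the shell can be covered by a single integration test, or by passing in plain functions as shown in the constructor, without a mocking framework.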

I do practice.

I do TDD katas. Not all the time or on any regular schedule, but when I find some free time, I do practice. It is very useful for building good habits. I am currently taking JB Rainsberger’s course on TDD, and I’ve taken some other courses on Udemy. I also do the Advent of Code every year. I’ve been doing it in Python, because I would like to learn Python. Maybe this year I will do it in LabVIEW. It is a great exercise for TDD. They literally give you a set of test cases in the problem description. If you want to learn TDD, start there.

I sometimes delete tests.

Yes, I often delete tests. Sometimes as I add more features to my production code, I realize that a new feature relies on some existing feature working properly. So in testing this new feature I am inherently also testing the existing one. So often I get rid of those redundant tests. Sometimes I keep them around as examples.

I refactor tests just as rigorously as production code.

I spend almost as much time refactoring tests as I do production code. My tests make heavy use of Test Utility methods and custom assertions. They make my tests much easier to read and make it quick and easy to add new tests. It can also help with debugging. On a recent SQLite project, I added a setup VI that had a flag you could toggle to use an in-memory database for speed or use a new file for each test. For normal testing, I just used in-memory. If a test failed, I reran that test with the flag set and then I could see the exact contents of the database. This made debugging very easy.
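The SQLite setup described above was a LabVIEW VI, but the same idea can be sketched in Python with the standard `sqlite3` module. Everything named here (`open_test_database`, `assert_row_count`, `KEEP_DATABASE_FILE`, the `readings` table) is made up for illustration and is not the original code.

```python
import os
import sqlite3
import tempfile
import unittest

# Flip to True when a test fails and the database needs to be inspected.
KEEP_DATABASE_FILE = False


def open_test_database(use_file=False):
    """Test utility: return (connection, path); path is None for in-memory."""
    if use_file:
        handle, path = tempfile.mkstemp(suffix=".sqlite")
        os.close(handle)  # sqlite3 reopens the file by path
        return sqlite3.connect(path), path
    return sqlite3.connect(":memory:"), None


def assert_row_count(test_case, connection, table, expected):
    """Custom assertion: keeps the SQL out of the individual tests."""
    (count,) = connection.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    test_case.assertEqual(
        count, expected, f"{table} has {count} rows, expected {expected}")


class ReadingsTableTest(unittest.TestCase):
    def setUp(self):
        self.connection, self.path = open_test_database(KEEP_DATABASE_FILE)
        self.addCleanup(self.connection.close)
        self.connection.execute("CREATE TABLE readings (value REAL)")

    def test_insert_adds_one_row(self):
        self.connection.execute("INSERT INTO readings VALUES (1.5)")
        self.connection.commit()
        assert_row_count(self, self.connection, "readings", 1)
```

With the flag set, each test leaves a `.sqlite` file in the temp directory (its location is in `self.path`) that can be opened in any SQLite browser after a failure. The sketch does not delete those files, so they need to be cleaned up by hand.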

I often don’t write unit tests for AF or DQMH code.

When writing AF or DQMH code, I try to keep the logic separate from the messaging framework. If I need a logger, I write a logging class that I can test serially. Then I wrap it in a DQMH module or Actor. I use the DQMH API testers or my AF Tester to verify that the right thing happens when I send the message. Then I rely on integration tests for the rest.
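As a rough analogy in Python (this is not AF or DQMH code, and `Logger` and `LoggerActor` are hypothetical names), the split looks something like this: a plain class holds the logic and can be tested serially, and a thin message loop around it only forwards messages.

```python
import queue
import threading


class Logger:
    """Plain logic with no messaging, so it can be tested serially."""

    def __init__(self):
        self.lines = []

    def log(self, level, message):
        self.lines.append(f"[{level.upper()}] {message}")


class LoggerActor:
    """Thin message loop that only forwards messages to the Logger."""

    _STOP = object()  # sentinel message that ends the loop

    def __init__(self, logger):
        self._logger = logger
        self._inbox = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def send_log(self, level, message):
        self._inbox.put((level, message))

    def stop(self):
        self._inbox.put(self._STOP)
        self._thread.join()

    def _run(self):
        while True:
            item = self._inbox.get()
            if item is self._STOP:
                break
            self._logger.log(*item)
```

Formatting rules get unit tests against `Logger` directly. The wrapper only needs a check that sending a message ends up calling `log`, which is roughly the job the DQMH API testers or an AF tester do.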

I get bored if my tests take more than 10 seconds.

I work very hard to keep my tests under 10 seconds. If it takes longer than that I get distracted by e-mail or start doom-scrolling on Twitter. That defeats the purpose. If my tests fail I want it to be fresh in my mind exactly what I did and what I was trying to accomplish. If it starts taking longer than 10 seconds, I start trimming redundant tests and look at ways to try and speed up the remaining ones.

Almost every test I write starts from copying an existing test.

I know all about DRY and the dangers of copying and pasting. However, I often find that I have the same setup but different inputs. It’s much easier to just copy it. Also, I like to have everything explicit. Sometimes that means duplicating things. If I find it too annoying, or that it distracts from understanding the test, I will throw the duplicated code into a Test Utility method. If the tests are similar enough, sometimes I will do a parameterized test, where I create arrays of inputs and run them through a for loop.
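In a text language, the "arrays of inputs through a for loop" approach might look like the following Python sketch. The `clamp` function is just a stand-in for whatever is under test. `unittest`'s `subTest` reports each failing row separately instead of stopping at the first one.

```python
import unittest


def clamp(value, low, high):
    """Stand-in for the code under test."""
    return max(low, min(value, high))


class ClampTest(unittest.TestCase):
    def test_clamp_cases(self):
        # Same setup every time; only the inputs and expected output change.
        cases = [
            # (value, low, high, expected)
            (5, 0, 10, 5),     # inside the range
            (-3, 0, 10, 0),    # below the range
            (42, 0, 10, 10),   # above the range
        ]
        for value, low, high, expected in cases:
            with self.subTest(value=value, low=low, high=high):
                self.assertEqual(clamp(value, low, high), expected)
```

Adding a new case is one more row in the list, which is about as cheap as copying a test but keeps the shared setup in one place.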

I run my tests as a pre-commit hook.

I like to set up a pre-commit hook in git to run my tests every time I commit. That prevents me from accidentally committing something that doesn’t work.
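A hook is just an executable file at `.git/hooks/pre-commit`; if it exits with a non-zero code, git aborts the commit. Here is a minimal sketch of one written in Python. The test command is an assumption (a suite that `unittest` can discover from the repository root); for a LabVIEW project it would be replaced with whatever command launches the test runner.

```python
#!/usr/bin/env python3
"""Sketch of a git pre-commit hook that refuses to commit on failing tests."""
import subprocess
import sys
from pathlib import Path

# Assumption: tests are discoverable by unittest from the repository root.
TEST_COMMAND = [sys.executable, "-m", "unittest", "discover"]


def run_tests(command=TEST_COMMAND):
    """Run the test command and return its exit code."""
    return subprocess.run(command).returncode


def main():
    exit_code = run_tests()
    if exit_code != 0:
        print("Tests failed; commit aborted.", file=sys.stderr)
    return exit_code


# Only act when git invokes this file under its hook name.
if __name__ == "__main__" and Path(sys.argv[0]).name == "pre-commit":
    sys.exit(main())
```

To try it, save the file as `.git/hooks/pre-commit` and make it executable. `git commit --no-verify` skips the hook on the rare occasion that is really needed.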