Head in the Cloud

This book is a few years old. The question is still valid though - why learn anything when you can just ask AI?

I bought this book a year or two ago and finally got around to reading it. I found the premise intriguing: how has such easy access to knowledge and information affected our society and the way people learn? The book is several years old, yet it is still relevant. When it was written, people were worried about the effects of Google and YouTube. Today, they are more worried about AI, but the concerns are still pretty much the same: How much should we rely on these sources of knowledge? How much of our memory and thinking should we outsource to them? What are the potential downsides?

The Value of Broad Knowledge

The book advocates for broad knowledge. It cites a bunch of studies showing that broad general knowledge (trivia) correlates with higher income and better health outcomes. Now, of course, correlation does not equal causation. The author talks a lot about how the experiments were conducted and the different ways they controlled for various factors, and by the end, it is pretty clear that if you want to be successful, developing a broad base of knowledge can be useful. I've written about this before.

The Renaissance Man

I have long been a big fan of the idea of the Renaissance Man. You look at people like Laplace and Lagrange, and their names show up in many different fields. They didn't just study one thing. I also remember my advisor for my Master's, Dr Mao. He was an electrical engineer, but he studied all kinds of other subjects and tried to connect them together. One of my favorite classes was Neuroscience and Control Theory, which he taught in conjunction with one of the Biology professors.

The idea of a well-rounded liberal arts education (and education in general) gets blasted by populist MAGA; however, it makes a lot of sense to have a broad education. Most breakthroughs come when someone brings an idea in from the outside. People who are too close to a problem often get blinded by the conventional wisdom in their field. Bringing in someone with a different perspective can break that thought process. This, in particular, is why DEI is so critical in spite of all the right-wing criticism.

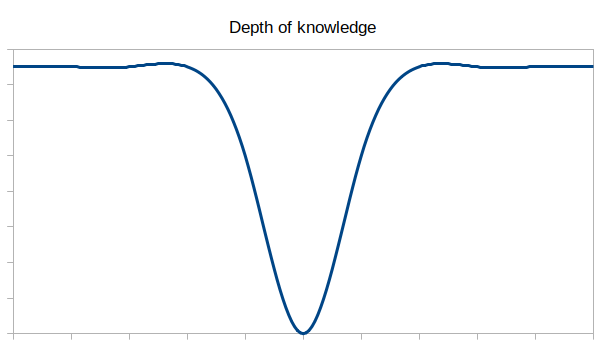

Here is an excellent article by Martin Fowler et al. It talks about the value of having more than one area of deep knowledge.

You can't Google what you don't know

The last line of the book makes a key point: you can't look up what you don't know to look up. As an example, imagine a person who likes eating mushrooms but never learned that some are poisonous. If they were walking in the woods and saw a mushroom, it wouldn't occur to them to Google whether that particular mushroom was poisonous. If you don't know what a 401k is, you aren't going to Google "How much should I put in my 401k?" To tie it back to programming, if you were writing a web service and didn't know anything about cybersecurity or SQL injection, you might not know to Google how to sanitize your inputs before sending them off to a database. In all cases, you need to have a general sense that the issue exists before you can Google how to solve it.
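For anyone who hasn't run into SQL injection before, here is a minimal sketch of what the problem looks like. It uses Python's built-in sqlite3 module with a made-up `users` table, and the function names are purely illustrative. Note that the safe version uses a parameterized query rather than sanitizing the input by hand, which is the approach usually recommended; treat this as a toy demonstration, not a complete security guide.

```python
import sqlite3


def build_demo_db():
    # Hypothetical in-memory database with a made-up "users" table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: user input is pasted straight into the SQL text,
    # so the input can change the meaning of the query.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver passes the input as data,
    # so it is never interpreted as SQL.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = build_demo_db()
malicious = "nobody' OR '1'='1"

# The unsafe version matches every row in the table.
print(len(find_user_unsafe(conn, malicious)))

# The safe version looks for a user literally named "nobody' OR '1'='1".
print(find_user_safe(conn, malicious))
```

The point is not the specific fix. If you have never heard the phrase "SQL injection", the unsafe version looks perfectly reasonable, and nothing would prompt you to go looking for the safe one.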

You can't vet AI answers if you know nothing about the subject

Similarly with AI, you need to know enough to ask the question, and you also need to know enough to tell whether the answer makes any sense at all, since AI is known to hallucinate, otherwise known as randomly making shit up. With AI, the problem is even worse than it just making things up, because its answers are supposed to sound like they make sense, and it is always confident. If you know nothing about the subject, you aren't going to know the right questions to ask, and you aren't going to know when it is just making shit up.

Technical Sophistication

I stumbled upon this series of programming books called Learn Enough X to Be Dangerous. The author uses the term technical sophistication. The basic idea is to have a good overview and to know what is worth paying attention to and what you can ignore for now. There is also a pragmatic side: not going too far down the rabbit hole trying to understand everything. It's also about knowing how to learn and how and where to Google and find stuff. I would call it technical intuition, and I think it is a very underrated skill. I thought the idea of technical sophistication dovetailed with this book quite well, since both are about knowing what is worth memorizing and what is worth Googling when you need it.

Here is an interview where I talked with Clare Sudbery a little about "How much do you really need to know?" It also seemed relevant to the discussion.

Overall Opinion

My overall opinion is that Head in the Cloud is a very entertaining book. Well worth reading. It is still particularly relevant today. My key takeaway was to continue to be curious (one of Martin Fowler's points in his article) and to not rely on AI to do my thinking for me. I kind of relate this to using a calculator. I still try to do as much math as I can in my head. Even when I use the calculator, I try to predict at least the order of magnitude of the answer. I don't just blindly trust it. Typos are possible, or in the case of AI, it is literally nothing but hallucinations; some just resemble reality enough to occasionally be useful.