Cycle Time

Getting quick feedback is very important. Here's a story of what can go wrong when you don't.

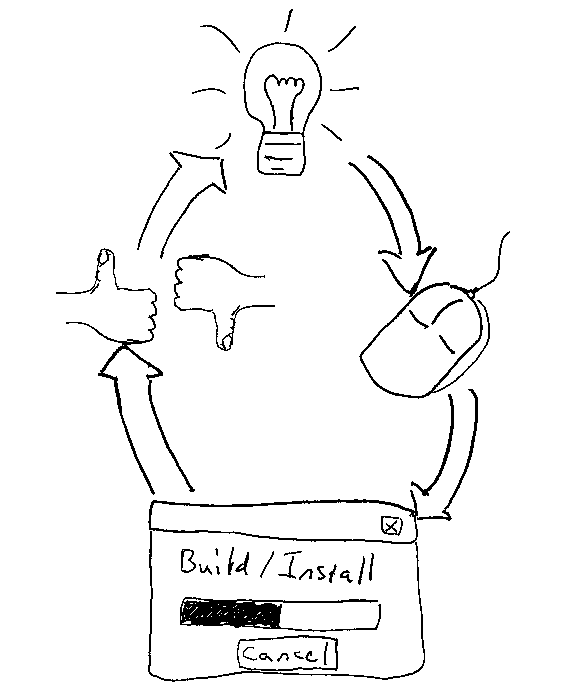

If you hang around agile circles enough, you hear a lot of talk about cycle time and getting fast feedback. What exactly does that mean? There are various opportunities for feedback in software development. If we are practicing TDD, we get immediate feedback on whether our tests pass. If we have some CI automation set up, we get feedback from our test and build process. Those all help verify that we are building "the thing" correctly, but how do we know we are building the correct "thing"?

That last question is actually really important. No matter how good your requirements gathering process is or how much you interact with the customer, there will always be a difference between what you build and what the customer wants or needs. The only way to identify that difference is to get the software into the customer's hands so they can give you feedback.

Why is this important?

Fast feedback is important because defects are easier to fix the earlier they are found in the process. If you are doing TDD and you make a typo, as soon as you run the test you'll know you made a mistake and you'll know exactly where to look. If somehow a bug escapes TDD and gets caught immediately by your CI pipeline, it should still be relatively easy to find the problem - it's whatever you were working on 10 minutes ago. If your customer finds the bug in production, then it is much harder. You have to sort through all the changes that went into that release to find the bug. That is expensive and time-consuming. Faster feedback through smaller, more frequent releases makes that process less painful.

An Example

Here's a real-world example of what can happen when your cycle times are long. I've been doing some consulting with a client lately. They are working on a large legacy project. The build took 3 hours. Before I joined them, they decided that was taking too long, so they automated it. That's a good first step. They set up a build server with Jenkins. Every night Jenkins would build their executable and let them know if the build failed. So far so good.

The Jenkins process stopped at the build step. It never tested that the executable worked, simply that it built without an error. They were also not doing frequent releases to the customer. They would work on features for several months and then, when they got a set of features done, the customer would upgrade. It had been several months since they had done a release.

The problem

We all sat in a meeting: the client, me, and another consultant they had also hired. I started asking some questions. I asked about testing, and somehow it came out that they had a test machine, but that they were running source code on it and not the executable. I asked why. They mentioned that the executable built, but wouldn't run. It had been that way for several weeks. They hadn't fixed it because they were under pressure to add new features. The other consultant and I looked at each other and said, "We need to fix that now."

So there were really two problems going on here. First, their feedback loop was really long. If they made a change in the morning, they had to wait until at least the next day to get feedback. Jenkins would do the 3-hour build overnight and then the next morning someone would manually install the build on the test machine. Second, they got distracted by adding new features and ignored the feedback that they were getting. Somewhere along the way they realized that the build didn't work, but they didn't prioritize fixing it.

The solution

It turns out that the executable was calling a bunch of code dynamically. Several of the dynamically loaded modules were missing some files that needed to be included in the build. The fact that several modules were affected made the whole process take even longer.

Of course, we didn't know any of this at the beginning. We just had a LabVIEW error message that kind of pointed us in that direction. So we had to gather some information first. We figured out how to turn on the detailed logs and keep the zip file around and some other tricks to gather information from the LabVIEW build process.

That confirmed that missing dependencies were our issue, but it didn't confirm exactly which ones. So we started a binary search, removing half the dynamic dependencies at a time and looking at the results. Because several dynamic modules had issues, our binary search method wasn't super effective and required many cycles.
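To illustrate why several faulty modules make this kind of search expensive, here is a rough sketch in Python. It is not what we ran on the LabVIEW project. It assumes each module fails independently of the others, and `find_all_faulty`, `make_fake_build`, and the module names are all made up for the illustration. The fake `build_ok` stands in for a real build and simply counts how many builds the search asks for.

```python
from typing import Callable, List, Sequence, Set, Tuple


def find_all_faulty(
    modules: Sequence[str],
    build_ok: Callable[[Sequence[str]], bool],
) -> List[str]:
    """Find every faulty module by repeatedly halving the failing groups.

    Assumes a build fails exactly when it includes at least one faulty module.
    """
    if not modules or build_ok(modules):
        return []  # nothing wrong in this group
    if len(modules) == 1:
        return [modules[0]]  # a failing group of one is a culprit
    mid = len(modules) // 2
    # With several culprits, both halves can fail, so both must be searched.
    return (find_all_faulty(modules[:mid], build_ok)
            + find_all_faulty(modules[mid:], build_ok))


def make_fake_build(
    faulty: Set[str],
) -> Tuple[Callable[[Sequence[str]], bool], List[int]]:
    """Return a stand-in for a real build plus a one-item build counter."""
    calls = [0]

    def build_ok(included: Sequence[str]) -> bool:
        calls[0] += 1  # every call represents one full (slow) build
        return not any(m in faulty for m in included)

    return build_ok, calls


modules = [f"module_{i}" for i in range(16)]

for faulty in ({"module_3"}, {"module_3", "module_9", "module_14"}):
    build_ok, calls = make_fake_build(faulty)
    found = find_all_faulty(modules, build_ok)
    print(f"{len(faulty)} faulty -> found {found} in {calls[0]} builds")
```

In this toy model, each extra faulty module adds another branch to search, and every step of every branch is a full build. When a build takes 3 hours, those extra steps are what hurt.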

The biggest challenge was the 3-hour build time. We could only do 2 builds per day. The engineer doing the troubleshooting would come in the morning, analyze the previous night's results, and come up with a plan. He'd make a few changes. That process would take about an hour. Then he'd kick off a 3-hour build. He'd come back around lunch, analyze the results, and come up with another plan. Then he'd kick off a build that wouldn't finish before he left for the day. The next day he would repeat. In all, it took a month to resolve the issue.
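The arithmetic behind that is worth spelling out. Here is a back-of-the-envelope sketch in Python. The 1-hour prep and 3-hour build come from the story above. The 8-hour workday, the 20 working days in a month, and the 10-minute build are my assumptions for comparison, not measurements from the project.

```python
import math


def cycles_per_day(prep_minutes: int, build_minutes: int,
                   workday_minutes: int = 480) -> int:
    """How many analyze-change-build cycles fit into one working day."""
    return workday_minutes // (prep_minutes + build_minutes)


def days_needed(total_cycles: int, prep_minutes: int, build_minutes: int,
                workday_minutes: int = 480) -> int:
    """Working days required to get through a given number of cycles."""
    per_day = cycles_per_day(prep_minutes, build_minutes, workday_minutes)
    if per_day == 0:
        raise ValueError("one cycle does not fit into a working day")
    return math.ceil(total_cycles / per_day)


# From the story: about 1 hour of analysis and changes, then a 3-hour build.
slow = cycles_per_day(prep_minutes=60, build_minutes=180)

# Assumption: a month is roughly 20 working days, so about 40 cycles in total.
total_cycles = 20 * slow

# Hypothetical comparison: the same number of cycles with a 10-minute build.
print("cycles per day with a 3-hour build:", slow)
print("days for", total_cycles, "cycles with a 3-hour build:",
      days_needed(total_cycles, 60, 180))
print("days for", total_cycles, "cycles with a 10-minute build:",
      days_needed(total_cycles, 60, 10))
```

The numbers are rough, but the shape is the point: the number of experiments you need stays the same, so the length of each cycle decides how many days the troubleshooting takes.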

Dodging a bullet

I would argue this client dodged a bullet. In the end, it took a month to resolve the issue - that is not good, but it could have been worse. What if the customer had decided that they were ready for the next release, or if there was some new feature they desperately needed right now? It still would have taken a month to fix that issue. It may have even taken longer because they would have kept building onto the code that already had problems.

I would also argue that it would have taken much less time to fix if they had stopped and fixed everything as soon as the executable stopped working. They would have found that they needed to take care when adding new dynamic modules to make sure everything was included. They wouldn't have ended up with multiple modules with missing dependencies that needed to be sorted out.

Lessons

So what lessons can we take from this? Here are a few:

- Do more frequent builds. Every night is good, but every commit is better.

- Automate testing that your exe actually launches and runs.

- Do everything you can to lower build time and total cycle time.

- Don't ignore the feedback you do get. The priority should be to immediately fix the pipeline every time it breaks.
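As a rough idea of what the second lesson could look like, here is a minimal launch check in Python that a CI job could run right after the build step. It is only a sketch: `launches_ok` is a name I made up, and "still running after a few seconds, or exited with code 0" is an assumed definition of "launches", which you would want to tighten for a real application (for example, an exe that is stuck on an error dialog would still pass this check).

```python
import subprocess
import sys
from typing import Sequence


def launches_ok(command: Sequence[str], startup_seconds: float = 10.0) -> bool:
    """Start the built executable and report whether it survives startup.

    Returns True if the process is still running after startup_seconds,
    or if it exits within that window with exit code 0.
    """
    try:
        proc = subprocess.Popen(command)
    except OSError:
        return False  # the executable is missing or cannot be started at all

    try:
        exit_code = proc.wait(timeout=startup_seconds)
    except subprocess.TimeoutExpired:
        # Still alive after the startup window: count that as a launch,
        # then shut the process down so the CI job does not hang.
        proc.terminate()
        try:
            proc.wait(timeout=5)
        except subprocess.TimeoutExpired:
            proc.kill()
            proc.wait()
        return True

    return exit_code == 0


# Stand-in for a real exe: a short Python process that exits cleanly.
print(launches_ok([sys.executable, "-c", "print('started')"], startup_seconds=5))
```

In a pipeline you would pass the path to the freshly built executable and fail the job whenever the function returns False, so a build that compiles but won't run gets flagged the same day.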

Those are the major lessons I saw. I do see others. What lessons do you see?