Can Your Code Be Too SOLID?

You can have too much of a good thing. Lots of code is hard to read because it uses too many unnecessary abstractions.

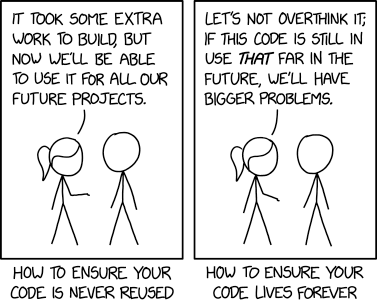

First a little bit of humor from xkcd.

This comic pokes fun at both extremes when it comes to future-proofing our code. On the left, the main character has put a ton of effort into futureproofing her code. She's thought of and implemented all the possible ways in which it could be expanded. The downside is that it is so complicated that no one will understand it and therefore it will never get used. On the right, she has not put any real effort into future-proofing the code, with the thought that it is just a proof-of-concept and will die and that if it does happen to live someone else will refactor/rewrite it. If you have been around long enough, you have probably seen both extremes.

One Man's Abstraction Is Another's Obstruction

This is a quote from Norm at the first GDevCon N.A. I was reminded of this by a recent post on LinkedIn - see the link below. Basically, the author was complaining about code with too many abstractions. He went on to talk about people treating SOLID and design patterns as goals, and not as tools to achieve better code. He also brought up the idea of reversible decisions - ie things that are easy to change later - and how we should take advantage of that to avoid adding unnecessary abstractions.

The Problem With Too Many Abstractions

So what is the problem with too many abstractions? Abstractions are supposed to simplify our life and make things easier to understand. In moderation they do, but when overused they confuse and muddy the water. Abstractions make things easier to understand by hiding details. That's great if everything works and you don't care about the details, but sometimes you do. Clicking through one level of abstraction to get to the details is reasonable, but clicking through many layered abstractions can often be confusing.

We constantly have to weigh the cost of the abstraction with its benefits. The cost is that it can potentially confuse readers. The benefit is that if the abstraction is well-understood it can make comprehension easier and it allows for future expansion. Allowing for future expansion is the sticky point. Is that future expansion actually needed? How do you know? Did you look into your crystal ball?

I have inherited lots of code with too many abstractions. Almost always the abstractions were there to allow for future features that never got implemented. Those abstractions may have made sense to the original author. In my case, I was new to the project, I didn't have that same crystal ball and no one had written down all the potential future use cases. At that point, the excess abstractions became obstructions. They made it unnecessarily difficult for me to understand the code and for what benefit? Some imagined future?

Why We Sometimes End Up With Too many Abstractions

So how do we end up with too many abstractions? I think it boils down to 2 main reasons. The first one is just that it is part of the normal developer arc. The second is that refactoring is a skill that not everyone has mastered and that is overly difficult in LabVIEW

The Developer Arc

I've written about the developer arc before. Basically, we all start out writing unmaintainable code because we don't know any better. Then at some point. we code ourselves into a corner and realize that SOLID or design patterns can rescue us from that corner. We become evangelists and start using our new hammer everywhere. At some point we get confused and the hammer becomes the goal and not the tool. Eventually, some of us take a step back and realize our folly and revert back to chasing simplicity. A lot of developers get stuck in that middle stage where the hammer becomes the goal. That hammer leads to creating lots of unnecessary abstractions.

Refactoring in LabVIEW

Another reason we get stuck with too many abstractions is that LabVIEW developers are generally not comfortable with refactoring. This inability to refactor leads to Big Design Up Front (BDUF). We are so afraid that we won't be able to refactor things later that we feel we must get everything right up front and that means that we must add abstractions for every possible expansion vector. This leads to lots of abstractions to account for future features that will never be implemented.

The lack of refactoring in the LabVIEW Community is due to 3 main reasons: lack of skill, lack of safe refactoring tools, and lack of unit tests.

There is a lack of skill in refactoring skills in the LabVIEW community. Refactoring is a skill. It needs to be taught and practiced. Unfortunately in the LabVIEW community, no one really teaches or practices it. It's briefly mentioned in some of NI's advanced courses, but that is it. It's hard to practice because refactoring katas require existing code and not much of that exists for LabVIEW. We need more katas and courses.

There is also a lack of safe refactoring tools in LabVIEW. There are a few built into LabVIEW such as create subvi or replace cluster with class. The real missing piece is class refactoring tools. Simple things like renaming classes and dynamic dispatch methods are difficult not to mention more complicated things like extracting interfaces. There is no reason these can't exist. There are several third-party scripting tools out there that address some of these problems, so it is possible. The problem is these tools are spread over many different packages and not discoverable, so not many LabVIEW Developers know about them. We need better safe refactoring tools for classes built-in to the LabVIEW IDE. Here is a comment about class refactoring in LabVIEW.

The last reason there is not a lot of refactoring going on in the LabVIEW community is the lack of unit testing. There are a variety of reasons. One reason is that we deal a lot with Legacy Code that has no tests. Another is that no one is really teaching unit testing (outside of us at SAS Workshops). NI touches on it in their advanced class, but barely. They treat it as some advanced skill only for architects. Without unit tests and without safe refactoring tools built into the IDE it is really hard to make significant changes to your code, which takes us back to the BDUF problem.

Simplest thing that could work

Back to the LinkedIn post. My original response to that was to stop chasing SOLID and design patterns and do the simplest thing that might possibly work. Use Test Driven Development. Start with a failing test. Write the simplest thing that could make the test pass and then refactor. If you happen to see an opportunity to apply one of the SOLID principles or see a design pattern emerging on its own, then great - use that! But don't try to force it. I truly think simplicity trumps any SOLID principle or design pattern. Remember they are just tools for achieving easily maintainable code, not the goal in and of themselves. Simple code will always be easier to maintain. If the code is too simple to add the feature you want, then refactor it first. Don't make your code harder to understand and maintain by prematurely adding all these unnecessary abstractions - or perhaps I should call them obstructions.

Easy to Change?

I did get some pushback. Someone amended my "Simplest thing that could work" and added "can also easily be changed" to it. On its face, I kind of agree with that idea. The main reason to use software over hardware is that it is malleable and easy to change. Part of the question is where does the easy-to-change part come from? Does it come from the software design itself? or does it come from our own ability to refactor? I believe it comes from our refactoring skills, not our design. Once we have mastered refactoring, we can use our refactoring skills to change the design to make it easier to extend. We don't have to put the extension into the design upfront.

Fortune-Telling

Putting the extension into the software design upfront so that our software "can also easily be changed" requires some fortune-telling. I have had all of the following extensions on projects:

- Add more of the same instrument

- Add more channels to an existing instrument

- Add the ability to apply settings on a per-channel instead of a per-instrument basis

- Add completely different instruments with different measurements

- Add support for new file types (csv, json, tdms)

- Add database support or support for a different type of database

- Add Alarming

- Add new calculations

- Add new report types

- Add more metadata to files or reports

- Add new visualizations

I don't believe it is possible to design software that can account for all these, and you certainly can't account for the ones that haven't made the list simply because you haven't encountered them yet. So if you are going to add flexibility to your design you have to pick and choose which ways it should be extensible. How do you know which ones to pick and choose? Which ones are actually necessary? You can't tell at the beginning of your project. I guess you have to talk to your local fortune teller. Keep in mind that each abstraction you add that never actually ends up being needed is just an obstruction.

Best time to add an abstraction

I'm not saying you should never add abstractions. I am saying you shouldn't add them until you need them. Once you have a new feature you are adding that would benefit from that abstraction, then refactor to add the abstraction as the first step in adding the feature.

Reversible Decisions

The original LinkedIn post mentioned reversible decisions. I equate that with refactoring. The better our refactoring skills the more decisions that are reversible. Reversible decisions mean there are fewer things we have to get right up front. This gives us the freedom to do the simplest thing that could possibly work without worrying about expansion later. We know that we can always refactor before expanding in that direction, whichever direction it happens to be.

Encapsulate but don't abstract

One place where I kind of blur this line is encapsulation. I use encapsulation a lot with hardware devices, files, communications buses, and databases. While it would be easy to drop some Keithley driver from the Instrument I/O palette for talking to a DMM, I often encapsulate it in a class. I find that brings a lot of benefits beyond the extensibility provided by OOP. The thing I don't do though, is I don't immediately create an interface or abstract class around it. I know I can do that refactoring step easily later if I need a different DMM. Having a single class over the driver provides just the right amount of abstraction. I feel like adding another abstraction layer in terms of an interface just complicates things unnecessarily and that it is an easily reversible decision, so I push it off until later.

What do you think?

What is the right amount of abstraction? Can you have too much? How do you balance flexibility with ease of understanding?